Isabel Fischer, Leda Mirbahai, David Buxton, Marie-Dolores Ako-Adounvo, Lewis Beer, Mara Bortnowschi, Molly Fowler, Sam Grierson, Lee Griffin, Neha Gupta, Matthew Lucas, Dominik Lukeš, Matthew Voice, Lichuan Xiang, Yiran Xu, and Chaoran Yang

University of Warwick community of practice on AI

Cartoon of university Vice Chancellors, men and women from different ethnic groups, leaping forward

On 4th July (a date of some significance), the 24 Vice Chancellors of the Russell Group universities published their guiding principles on the ethical use of generative AI. The statement aims to shape work at institution and course level to support the ethical and responsible use of generative AI, including new technology and software like ChatGPT. It recognises that AI usage is becoming common practice among university staff and students.

The new set of principles has been created to help universities ensure students and staff are ‘AI literate’ so they can capitalise on the opportunities technological breakthroughs provide for teaching and learning.

The five principles set out in the statement are:

- Universities will support students and staff to become AI-literate.

- Staff should be equipped to support students to use generative AI tools effectively and appropriately in their learning experience.

- Universities will adapt teaching and assessment to incorporate the ethical use of generative AI and support equal access.

- Universities will ensure academic rigour and integrity is upheld.

- Universities will work collaboratively to share best practice as the technology and its application in education evolves.

Whilst it is necessary (if overdue) for universities to collectively outline their position that generative AI should feature in education and research, the challenge of how to achieve this remains. The statement outlines where we wish to be (i.e., AI literacy for students and staff, and ethical use of AI in education and research). What remains open is how we plan to achieve these goals, and on what timelines. For example, achieving AI literacy requires significant upskilling of both staff and students, which in turn requires resources, training and investment of time. In a world where AI is constantly evolving, having a clear strategy for reaching our end goals is crucial. The goals can only be achieved by forming cross-institute working groups and teams of digital transformation champions.

Over the last six months, a University of Warwick community of practice with over 50 members reviewed the opportunities and risks of generative AI. The group was open to the entire Warwick community and was composed of students and staff, as well as members from other institutions and industry. To share best practice, the group has now captured its findings and made the current pre-print version available to others here.

The main aim of the report is to provide guidance to staff when preparing for the new academic year. It provides supporting insights, ideas and resources. Specifically, the report covers the following areas:

- The AI-enhanced Learning Environment – some big questions

- AI for Teaching and Learning, including tools for educators

- Academic Integrity and AI Ethics

- Assessment Design

- Dialogue using AI, including Formative Feedback

- AI as a driver of Digital Transformation in Education

The report is structured in three parts. The first part was written by students, the second by the collective group, and the third part consists of individual opinion pieces. Readers are invited to move between chapters according to their interests. For example, for a summary of links to useful tools for educators, go to Chapter 3.2; to learn how to use generative AI to prepare tables that might help neurodivergent students' learning, go to Chapter 3.5.2; and for the proposed policies on AI and academic integrity, start with Chapter 2.3 and continue with Appendix 3.

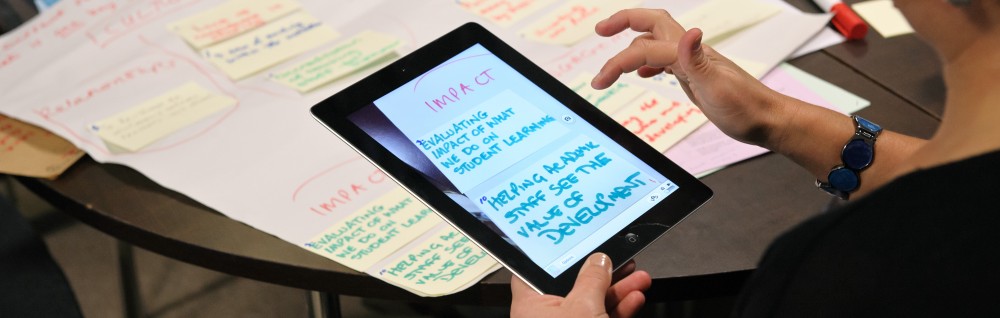

Finally, turning our attention back to the Russell Group principles and to the image at the start of this blog, one Russell Group AI principle is certain: to stay on a par with industry in an increasingly AI-enabled world, (Higher) Education providers need to collaborate to make a leap forward that is not a directionless lurching. We look forward to hearing your comments on our report and to continuing the journey together.

Isabel Fischer (lead author) is a Reader in Information Systems at Warwick Business School (WBS), researching and teaching in the area of AI and digital transformation. In the past six months Isabel led a community of practice on AI in education at the University of Warwick.

@Isabel_Fischer

Link is here:

Click to access rg_ai_principles-final.pdf
