Higher education experiences fads, some of which pass by unlamented. The buzzword at the moment is ‘student engagement’. Whether it is national bodies, student organisations, institutions, or teaching development units, everyone is pressing the ‘student engagement’ button. Even the recent government Green Paper on the future of higher education in England refers to student engagement. However, much like ‘student centred learning’, the term ‘student engagement’ has come to refer to so many different things that it is difficult to keep track of what people are actually talking about. It also seems that good evidence about the importance of one particular form of engagement has been co-opted by those promoting other forms of student engagement for which there is actually little or no evidence of impact. The term ‘engagement’ is used to sprinkle stardust on almost any related activity.

One use of the term concerns student retention. Small campus-based universities with full-time resident students are in a much better position to achieve high retention than are inner-city universities with large classes, dispersed buildings and many part-time students living at home. The Open University is always near the top of the National Student Survey rankings but always at the bottom of student retention rankings, so good teaching and good retention are achieved in rather different ways. As class sizes have increased, social cohesion has fallen away: cohort size and class size both negatively predict retention. Decades ago Tinto described what needed to be done to improve retention as ‘academic and social integration’. Explanations of this link between social cohesion and retention have been largely sociological rather than pedagogical, and only recently has the term ‘engagement’ been used to refer to it. Efforts to improve this kind of student engagement might revolve around the Students’ Union, the provision of social learning spaces open late into the evening, or peer mentoring by more senior students from the same social or ethnic background. Such efforts often attempt to put in place formally what comes about informally in small, residential, socially coherent institutions, and they may not involve teachers at all.

Then came Astin’s vast studies of what it was about spending three years at college that made any difference to what students learnt. Repeated in subsequent decades, the studies consistently identified the same teaching, learning and assessment practices that predict learning gains, such as close contact with teachers, prompt feedback, clear and high expectations, collaborative learning and ‘time on task’. What these practices have in common is that they engender ‘engagement’ with learning, and it is student engagement that has been found to predict learning gains, not such variables as class contact hours or research prowess. Here ‘engagement’ is about students’ engagement with their studies, not with their social group or their institution, and the focus is clearly on pedagogic practices.

The key variables that make up engagement have been captured in a questionnaire, the National Survey of Student Engagement (NSSE), which is used throughout most of the US and, in various forms, around the world. When US teachers refer to ‘engagement’, this has come to mean ‘scores on the NSSE’, just as National Student Survey scores have become the currency of quality in the UK. The Higher Education Academy has piloted its own short version of the NSSE for use in the UK. It is to be hoped that valid engagement questions will eventually find their way into the National Student Survey, because engagement is a better indicator of educational quality than is ‘satisfaction’. The UK government’s proposed Teaching Excellence Framework (TEF) seems likely to use a measure of engagement as a teaching metric in its evaluation of teaching quality, which will determine the level of fees institutions are allowed to charge students. The effect of this will be immediate: what ‘engagement’ means will be redefined by the TEF and its associated funding implications, and interest in other forms of engagement seems likely to fall away.

Once you have a measure as widely used as the NSSE, it is possible to identify what is going on where students’ engagement with their studies is measured to be especially high (or low). Kuh has summarised this research for the benefit of administrators, pointing out that students who are more engaged with their studies are also more engaged with their institution’s campus, with governance, with volunteering, with student activities, and so on. The assumption has developed that if you can engage students outside the curriculum, they will also be more engaged inside it. Sometimes this assumption rests on a misunderstanding of the statistics: some students are engaged in pretty much everything while others are engaged in nothing, so what is being described may be differences between students rather than between courses or institutions. It also seems possible that a student who spends half their time on Students’ Union activities might have less time to spend on their studies. Nevertheless, a growing belief underlies some efforts in the UK that engaging students outside the curriculum will cause engagement with their studies, rather than simply compete for students’ time. Some of the activities being touted as engaging students with their campus include employing students part-time on campus, even if this only involves burger flipping in the Students’ Union food hall, so the definition of engagement here is sometimes stretched quite a long way.

At the same time the UK’s national quality agency, the QAA, have noticed that student involvement in quality assurance, primarily through feedback questionnaires and student representatives on course committees, does not always work especially well, and so they have set their benchmark somewhat higher for all future institutional audits. They have called what they are auditing ‘student engagement’, and a good deal of effort is being put into making student engagement in quality assurance work better. For example, at Coventry University students administer and interpret student feedback questionnaires themselves. At Winchester, one student per degree programme per year is given a bursary to undertake an educational evaluation that feeds into annual course review. Whether this has positive impacts on engagement with studies, or on learning gains, has yet to be demonstrated unambiguously, though it probably develops the employability skills of the few students who are course reps or evaluators. The QAA are probably right that better student engagement in quality assurance benefits quality, but claims about the benefits to the students doing the quality work, let alone to their peers who are not, are not yet well founded.

Some institutions have become much more radical and have involved students not just in spotting quality problems but in solving them: this is ‘engaging students with teaching enhancement’. For example, at Exeter one student per department was hired and trained to teach their lecturers how to use Moodle. Within a year the virtual learning environment was being used for all kinds of pedagogically worthwhile purposes in every course in the university, after a decade of limited progress when students were not driving the change. The race is on to find new ways to employ students as change agents, and this too is being described as ‘student engagement’.

There is some evidence that universities with more developed ‘student engagement’ mechanisms, of one kind or another, are improving their NSS scores faster than others. The difficulty in interpreting this evidence is that student engagement means different things in different institutions, and those institutions that are serious about student engagement are probably also serious about all kinds of other quality enhancement mechanisms, and care about their students, so any improvement cannot easily be attributed to student engagement alone.

For decades students have also been co-opted into teaching and assessment roles as part of educational innovations, for example through self- and peer assessment, peer tutoring, and peer mentoring. Many such practices used to be called ‘student centred learning’. Some of these ‘engagement with teaching’ practices have been reified into formal systems (e.g. ‘Supplemental Instruction’), marketed all over the world, and researched in detail. In summary, you can often produce quite large educational gains when students do for themselves and for each other what teachers previously did for them, and all this is often free. Lincoln even involve students, alongside their teachers, in designing the modules they are about to take (a practice I first encountered in Berlin in 1976).

Finally, some institutions are interested primarily in ‘engaging students with research’. For example, MIT provides research internships in real research groups for 80% of its undergraduates. Much of this is paid for through research grants, and sometimes students get academic credit for it, but in the main it sits outside the normal curriculum: most pedagogic practices in taught courses are unchanged. Nevertheless, this Undergraduate Research Opportunities Program (UROP) has had measurable impacts on students’ aspirations to be researchers, amongst other benefits, though benefits to grades in parallel courses have not yet been identified.

So if you hear a manager banging on about student engagement, ask them to define what they are actually talking about.

Suggested reading

Vicki Trowler (2010) Student engagement literature review. Higher Education Academy.

This ‘#53ideas’ item has been developed from an article by Graham Gibbs published by The Higher, and their permission to use text from that article is gratefully acknowledged.

53 Powerful Ideas All Teachers Should Know About

SEDA is publishing online, both on its website and on its blog, one of Graham Gibbs’ 53 Powerful Ideas All Teachers Should Know About, with the intention of prompting debate about the underlying basis of our work. Graham has also invited a number of well-respected international thinkers and writers about university teaching, and how to improve it, to each contribute one idea to the ‘53 Powerful Ideas’ collection. On the blog we hope that you will comment on and discuss the ideas set out. Once all 53 ideas have been published, the intention is to hand over to our community and publish one idea from members each week, to continue the debate.

We invite you to join the discussion, using the comment box below, in response to Graham’s questions.

At your institution, what does ‘student engagement’ mean?

Are your own students engaged? What do you mean by this?

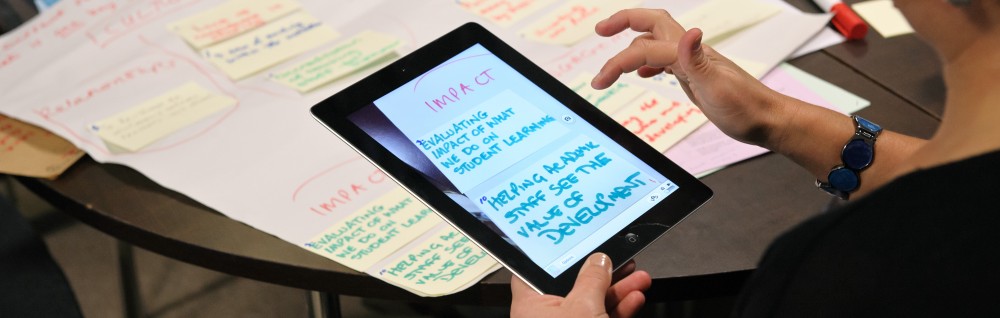

A few years ago, when ‘student engagement’ started to become an important feature of the HE landscape, three of the HEA’s Subject Centres (representing Arts, Humanities and Science), together with the NUS, brought together c. 30 student reps for ‘Engage!’, a two-day residential to explore ‘student engagement’. We started with everyone contributing to the creation of a large map of ‘the student experience’. What struck all of us was that while the map filled quickly with loads of the ‘social engagement’ stuff, there was nothing about teaching and learning until a small bubble appeared at the lower left of the map. It was accompanied by an ‘Oh, of course!’ moment.

That experience reflects, in a small way, what Graham is writing about above. It suggests that, while we fret about engagement in learning and teaching and how to measure it, reducing ‘engagement’ to what happens in the lecture theatre, seminar room, studio or lab (and, as an aside, reducing the true complexity of a student’s abilities and experience to a grade or number) “loses everything important about the individual” (Todd Rose, ‘The End of Average’).

The above article says:

“There is some evidence that universities with more developed ‘student engagement’ mechanisms, of one kind or another, are improving their NSS scores faster than others”

Does anyone know where this evidence can be found or who has written about it?